We’re starting a series of articles focused on music innovation, and we’ve decided to begin with a general AI piece to set the stage. We also considered naming this article “Music AI for Dummies—BMAT Edition,” but we ended up opting for a more cautious approach.

If you’re an engineer or deeply engaged in AI research, coding, or a related field, this article may not unveil groundbreaking discoveries. Consider yourself warned, and feel free to enjoy its occasional puns and entertaining references.

Alan had AI dream

In 1950, Alan Turing published a paper entitled “Computing Machinery and Intelligence”, which opened the doors to what is now globally known as Artificial Intelligence (AI). Five years later, a program called the Logic Theorist, designed to mimic the problem-solving skills of a human, was presented. It is often considered to be the first artificial intelligence program. Since then, considerable effort has been dedicated to AI, mainly in the academic field.

So, what is AI Music

AI music is music that is composed, produced, or generated with the assistance of artificial intelligence (AI) technologies. AI-generated music platforms are like smart synthesisers that make their own music using artificial intelligence. They’re trained on loads of music data like vocals, chords, and rhythms, to create new songs by themselves.

How does AI music work

It uses algorithms, Machine Learning and other computational methods to analyse musical data, understand musical patterns and come up with original tunes. So, instead of having a human composer, you’ve got a computer doing the job. AI music can cover many creative outputs, from simple melodies to complex compositions that sound like your favourite artists made them.

One example is Daddy’s Car, a song composed with AI in the style of the Beatles. Released seven years ago, it was among the first examples we encountered at an AI Song Contest that still runs today. It’s worth mentioning that although AI handled the composition, the song was brought to life by (human) musicians who took care of the performance, vocals, recording, and production.

What has changed since the beginning

At its core, not much. Most of the engines are symbolic, and none of them is quite there yet (we said what we said). On the marketing side, a lot has changed. Large Language Models have impressed the world, and almost everyone now acknowledges that AI can replicate human intelligence and perform most tasks that traditionally require human cognitive abilities, including creative projects.

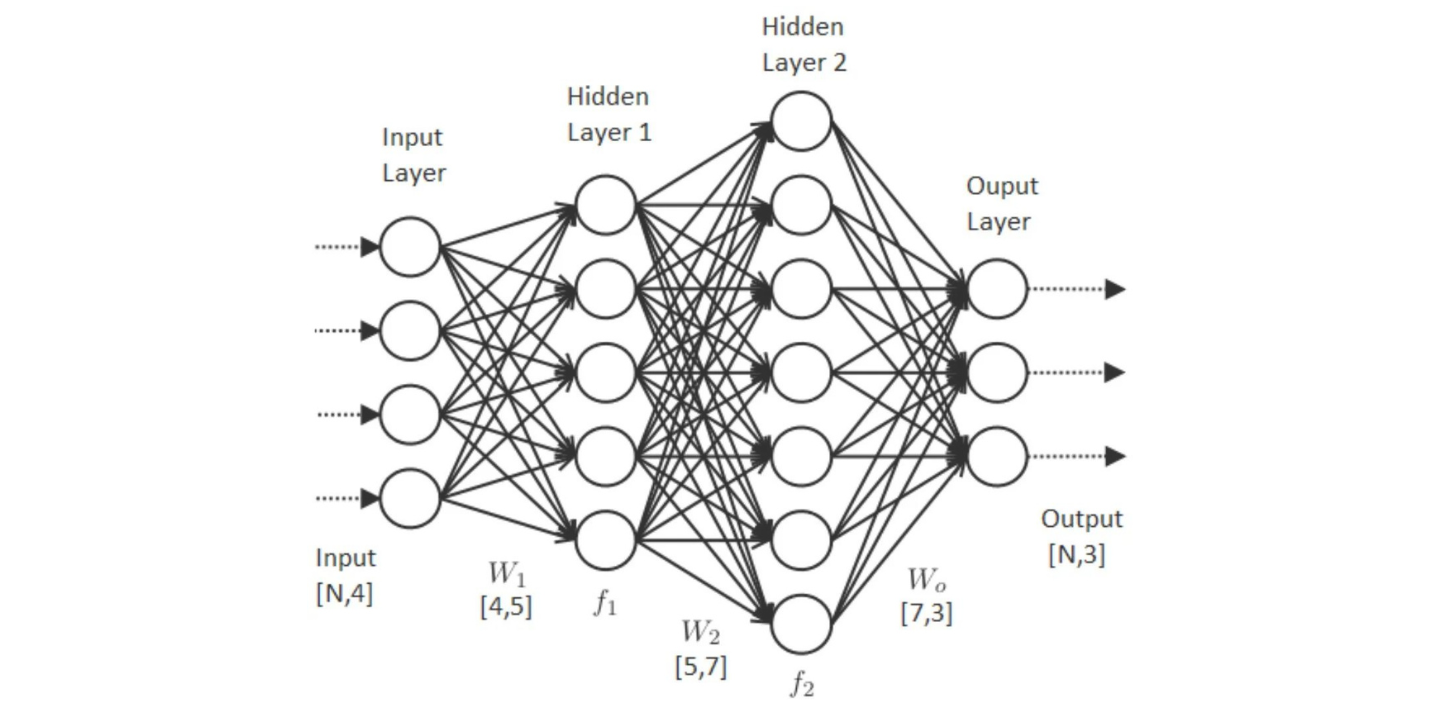

These models represent the progress enabled by Artificial Neural Networks (ANNs), computer systems made up of connected artificial neurons. Inspired by the intricate neural networks of the human brain, these artificial neurons are organised into layers with interconnected algorithms.

As ANNs grow and develop, they achieve things that were once thought to be possible only for humans, like being creative.

What is and what isn’t gen AI

Generative AI music, which raised strong concerns across the industry in 2023, relies on a class of artificial intelligence techniques that generate new data, content, or outputs that are similar to, but not the same as, the data they were trained on. Most non-generative AI use cases in the music industry, however, shouldn’t worry creators.

AI operates through a complex interplay of algorithms, data, and computational power, processing vast amounts of data, recognising patterns within that data, and making decisions or predictions based on those patterns.

Because these AI algorithms can spot patterns and trends in vast amounts of data, they enable informed decision-making and can be very helpful to most actors in the value chain. Existing use cases include the mapping of users’ listening habits by Digital Service Providers (DSPs), assisted mixing and mastering, automated music tagging, metadata matching, data reconciliation, and many more.

In short, generative AI music creates new content based on learned patterns, while non-generative AI assists in tasks like analysing listening habits and tagging music without generating new content.

Machine Deep Learning 101

One of the key components of AI is Machine Learning – a subset of AI that focuses on enabling computers to learn from data without being explicitly programmed. Machine Learning algorithms are trained on large datasets, where they iteratively adjust their parameters to improve performance on a specific task. This process allows AI systems to recognise patterns and make predictions or decisions with increasing accuracy over time.
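To make "iteratively adjust their parameters" a bit more concrete, here is a minimal sketch of gradient descent fitting a straight line to five points. The function name `train_linear`, the toy data and the learning rate are all our own illustrative assumptions, not a description of any production system.

```python
def train_linear(xs, ys, lr=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start from parameters that know nothing
    n = len(xs)
    for _ in range(epochs):
        # Prediction error of the current parameters on every sample.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Nudge each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy dataset generated from y = 2x + 1 (hypothetical, for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

Each pass through the loop is one round of "look at the errors, adjust, repeat", which is the same idea that larger models apply to millions of parameters.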

Deep learning, a subfield of Machine Learning, has gained significant traction in recent years due to its ability to learn hierarchical representations of data automatically. Deep learning models are neural networks made of multiple layers of interconnected nodes that extract intricate features from raw data, enabling more sophisticated tasks like image recognition, natural language processing, and autonomous driving.

Cool cats, according to AI

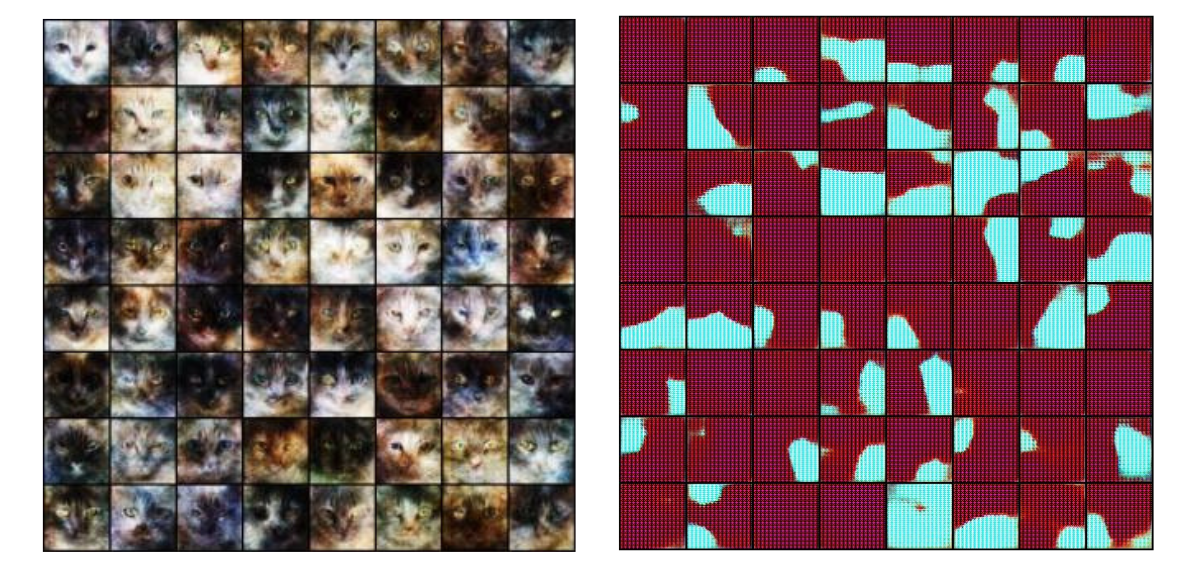

Image recognition is a good example for understanding the mechanics of deep learning. To train AI software to recognise cats, the neural network’s input layer takes in millions of raw cat images. It transforms this data into numerical values and passes them through subsequent network layers, each refining the representation of the input. As this process continues, the information in the hidden layers becomes increasingly abstract, losing its direct correlation with the original image data.
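The layer-by-layer flow described above can be sketched in a few lines. This is only an assumption-laden toy: the weights are random and untrained, the "image" is 16 made-up pixel values, and the helper names (`dense`, `forward`, `random_layer`) are ours. It shows the plumbing, not a working cat detector.

```python
import math
import random


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(pixels, layers):
    """Pass the pixels through the hidden layers, then one sigmoid output neuron."""
    activations = [pixels]
    for weights, biases in layers[:-1]:
        activations.append(dense(activations[-1], weights, biases))
    out_weights, out_biases = layers[-1]
    z = sum(w * x for w, x in zip(out_weights[0], activations[-1])) + out_biases[0]
    return activations, 1.0 / (1.0 + math.exp(-z))


def random_layer(n_in, n_out, rng):
    """Untrained layer with random weights and zero biases (illustration only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
layers = [random_layer(16, 8, rng), random_layer(8, 4, rng), random_layer(4, 1, rng)]
pixels = [rng.random() for _ in range(16)]  # stand-in for a 4x4 greyscale image
hidden, cat_score = forward(pixels, layers)
print([len(h) for h in hidden], cat_score)
```

Notice how the 16 pixel values shrink to 8 numbers, then 4, then a single score between 0 and 1. By the second hidden layer, none of the numbers maps back to a particular pixel any more.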

A case in point is the Meow Generator, a collection of Machine-Learning algorithms that have been unleashing thousands of disturbing cat faces into the world: 15,749 of them, to be exact. These creepy cats are the creation of Alexia Jolicoeur-Martineau, who started with a dataset of 10,000 cat pictures and focused on their faces. To create new cat faces, she used a generative adversarial network (GAN), which consists of two neural networks, one generating images and the other judging their authenticity.

These networks were trained to recognise cat faces and generate new ones. The generator adapts based on the discriminator’s feedback until the generated images are almost indistinguishable from real ones – almost.
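The tug-of-war between the two networks can be sketched with a deliberately tiny GAN that works on single numbers instead of cat pictures. Everything here is an assumption made for illustration: the "real" data is drawn from a bell curve around 4, the generator is just `a * noise + b`, the discriminator is a single sigmoid neuron, and `train_toy_gan` is a hypothetical name. It is not how the Meow Generator was built, and a toy this small is not guaranteed to settle down.

```python
import math
import random


def sigmoid(z):
    """Logistic function, with the input clamped so math.exp cannot overflow."""
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def train_toy_gan(steps=1000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # stand-in for one real data point
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b

        # Discriminator step: push D(real) up and D(fake) down.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: use the discriminator's feedback to push D(fake) up.
        d_fake = sigmoid(w * fake + c)
        feedback = (1.0 - d_fake) * w  # gradient of log D(fake) w.r.t. fake
        a += lr * feedback * noise
        b += lr * feedback
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(a, b, w, c)
```

The two update blocks are the whole trick: the discriminator learns to tell real from fake, and the generator is adjusted using only what the discriminator currently believes.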

Eventually, the AI determines the relationship between the observed data and the desired output—such as recognising a cat in the final layer. Consequently, the reasoning behind the AI’s decision becomes opaque to human understanding, making it challenging for operators to trace how a specific result, like identifying a cat, is reached.

AI systems also utilise natural language processing (NLP) techniques to understand and generate human language, computer vision to interpret visual information from images or videos, and reinforcement learning to make sequential decisions through trial and error.

Everybody will be AI dancing, and BMAT will be doing it right

We and AI go way back, and this serves as extra motivation for us to become a tech leader in that field for the music industry. As such, we are working on pioneering ideas such as the use of embeddings to match and homogenise music data and metadata databases.

Fundamentally, we’re exploring new AI techniques and applying them to entity resolution within the music industry to develop the best data-matching engine. This engine will optimise specific use cases and enable scalable back-end services. These include the creation of master databases to unify different versions of musical entities, establishing links between these entities, and identifying music compositions and phonograms within unstructured texts from platforms like YouTube.

In particular, we investigate and evaluate the use of so-called embeddings (vector representations of raw data that encapsulate its semantic meaning) for comparing entities and the use of Transformer-type neural networks. Embeddings can be generated from raw text, images, or audio.
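As a rough illustration of comparing entities through embeddings, the sketch below ranks a tiny catalogue by cosine similarity to a query vector. The three-dimensional vectors, the `work_a`-style identifiers and the helper names are made up for the example. Real embeddings come out of a trained model and have hundreds of dimensions, and this is not a description of BMAT's engine.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def best_match(query, catalogue):
    """Return the (name, score) of the catalogue entry closest to the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalogue.items()]
    return max(scored, key=lambda pair: pair[1])


# Hand-written stand-ins for embeddings of three catalogue entries.
catalogue = {
    "work_a": [0.9, 0.1, 0.0],
    "work_b": [0.0, 0.8, 0.6],
    "work_c": [0.1, 0.0, 0.95],
}
query = [0.85, 0.2, 0.05]  # hypothetical embedding of an incoming record
name, score = best_match(query, catalogue)
print(name, round(score, 3))
```

Because similar items end up pointing in similar directions, matching becomes a geometry problem: two records that describe the same work should score close to 1, even if their raw text or audio differs.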

At BMAT, we have trained an AI system able to encode the musical information of an audio file into an embedding, and we use these embeddings for large-scale cover song identification, with very interesting results. Our preliminary experiments on a collection of 3 million songs achieved the expected results for standard covers, but we also observed promising side effects of the algorithm. We realised that we’ve built a system able to spot copyright infringements from a wide musical perspective. We plan to keep developing this idea to fully understand the capabilities of the technology for similar use cases.

The UPF-BMAT Chair on Artificial Intelligence and Music is another AI initiative in which BMAT is engaged. Think of it as a sturdy chair supporting the weight of innovation and creativity in the music industry in Spain.

In collaboration with UPF, this Chair involves researchers and staff from Music Technology Group and BMAT, establishing partnerships with institutions and experts in the national music sector. This includes applied research, software development, and creating advanced technologies beneficial to the sector, promoting open innovation through public-private collaboration.

And we also made sure that the Chair is as comfortable as a studio recliner for those long brainstorming sessions that await us.

This is (not) the end, my friend

If you’re worried that AI will destroy the music industry, chill. It’s like giving a fish, a highly intelligent one though, a bicycle – impressive, but not really doing the trick.

Or maybe you’re reading this from the future ROFL-ing. Only time will tell.

There’s a popular internet quote saying that if you want to know the future, you have to look at the past. Although there’s no evidence that Albert Einstein actually said this, hear us out.

After the release of The Jazz Singer in 1927, all bets were off for live musicians who played in movie theatres. Thanks to synchronised sound, live musicians became unnecessary at the movies, and all of a sudden, more than 80% of musicians in the industry were out of work.

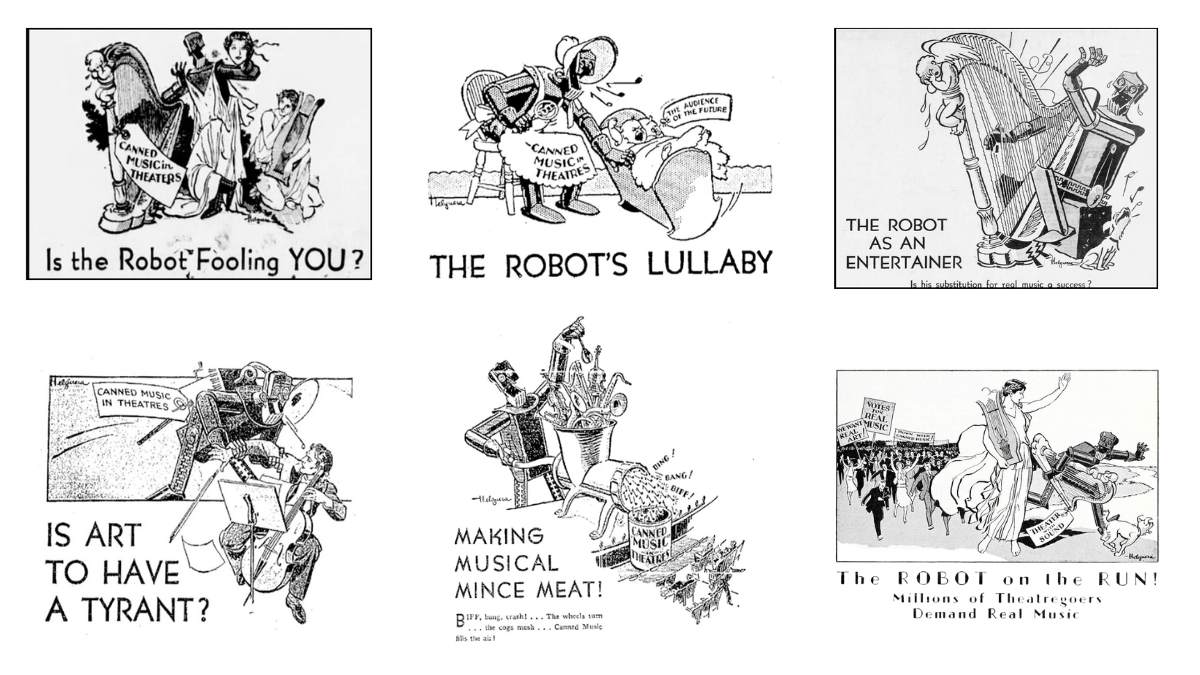

In 1930, the American Federation of Musicians established a new organisation named the Music Defense League. They initiated harsh advertising campaigns to fight the spread of what they believed was a dreadful threat: recorded sound, or as they termed it, “canned music.”

It’s hard not to notice the similarities.

AI is making waves across various sectors, including business, product development, technology and creativity. AI’s impact extends to the music industry itself with applications ranging from AI-generated music to innovative tools for music operations and royalty distribution – and that would be our cue.

With new AI laws on copyrighted music in Europe and never-ending industry conversations, we felt like it was time to launch an initiative to examine AI’s impact from every angle. In other words, we’ve started a company-wide effort to explore how AI is reshaping the music industry.

The plan is to ride the AI wave, cutting costs and boosting productivity while shifting our focus to more strategic human endeavours. The rest of the planet is doing the same, but our effort is oriented towards the music industry, because that’s our bread and butter. At the same time, we aspire to elevate our standards to ensure every artist has the best chance of getting paid. That’s been our thing since day 1.

This is how we founded the BMAT AI Defense League (sadly not the AI Musical Justice League), a cross-functional in-house division dedicated to driving innovation across several sectors. We’re leveraging AI to fuel growth, optimise business workflows, streamline development processes, and push the boundaries of digital innovation through research and product development. And a few other things, but we’ll leave those classified for now.

We’ll disclose more as we go, so keep an AI on us.
