Music detection, deep learning and our Deep Music Detector

The definition of music is usually quite abstract, touching on the concepts of pitch, rhythm, and timbre.

When we say that a sound has a particular pitch, we mean that we can easily tell whether it is high or low. Imagine you’re sitting in a recording studio while the guitarist strums a few notes: he or she is making pitched sounds. The air-conditioner droning in the next room, however, is not.

Then there’s rhythm, which refers to how sounds are organised in time, and timbre, known as the ‘colour’ of sound, which allows us to tell the difference between a trumpet and a piano.

If music is defined as an organised set of sounds with these characteristics, then a ringing phone, the siren of an ambulance, the horn of a car or a bird’s chirp could all be considered music. Other definitions add that music should convey emotions or ideas. We are not sure whether the sounds mentioned above do that, but they certainly carry a message.

We believe, though, that the definition of music is much simpler: any sound can be considered music if it was created with the purpose of being music. Or, in the words of the French composer Claude Debussy: “any sounds in any combination and in any succession are henceforth free to be used in a musical continuity”.

Music Detection

We haven’t yet got round to building a detector of musical purpose. What we do have is a pretty good detector of musical sounds – sounds that carry pitch and/or rhythm – which was ranked the best music detection algorithm at MIREX 2018, the annual evaluation campaign where algorithms for Music Information Retrieval tasks are benchmarked against each other.

Music is our passion and obsession. We love listening to music (as I’m doing while writing this post), and most of us even play in bands or run small indie labels in our free time. We want to know where music is being played, everywhere and at all times. And this is the main task of our music detection algorithm, the Deep Music Detector (DMD).

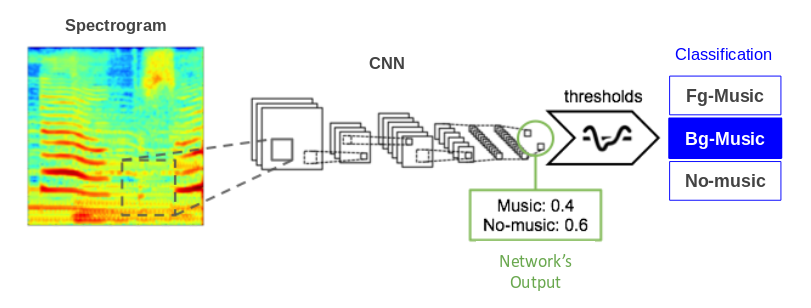

DMD is a convolutional neural network trained to estimate what proportion of the loudness in an audio segment belongs to musical sounds and what proportion belongs to non-musical sounds. Using a set of thresholds, we map this proportion to one of three classes: foreground music, background music or no music. The network has listened to several million seconds of audio from broadcasters all around the world, which has allowed it to learn to detect the presence of music in almost any genre.
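To make the last step more concrete, here is a minimal Python sketch of how a music proportion could be mapped to the three classes. The threshold values `FOREGROUND_THRESHOLD` and `BACKGROUND_THRESHOLD` are hypothetical, since the post does not publish DMD’s actual values. The helper `music_proportion` is also an assumption: it presumes separate music and non-music stems (as you would have when building synthetic training mixes) and uses signal energy as a crude stand-in for perceptual loudness. It only illustrates what such a target could look like, not how DMD’s labels were actually produced.

```python
from typing import Sequence

# Hypothetical thresholds: DMD's real values are not given in the post.
FOREGROUND_THRESHOLD = 0.5
BACKGROUND_THRESHOLD = 0.1


def energy(samples: Sequence[float]) -> float:
    """Sum of squared samples, a crude stand-in for loudness."""
    return sum(x * x for x in samples)


def music_proportion(music: Sequence[float], non_music: Sequence[float]) -> float:
    """Share of the segment's energy contributed by the music stem (0.0 to 1.0).

    Assumes the stems are available separately and are roughly uncorrelated,
    so that their energies approximately add up in the mix.
    """
    e_music = energy(music)
    total = e_music + energy(non_music)
    return e_music / total if total > 0 else 0.0


def classify(proportion: float) -> str:
    """Map a (predicted) music proportion to one of the three classes."""
    if proportion >= FOREGROUND_THRESHOLD:
        return "foreground music"
    if proportion >= BACKGROUND_THRESHOLD:
        return "background music"
    return "no music"


if __name__ == "__main__":
    # Quiet music under louder speech-like sound: roughly 0.31 of the energy.
    print(classify(music_proportion([0.2] * 100, [0.3] * 100)))  # → background music
```

In a deployed detector the network would predict the proportion directly from the mixed audio; the thresholding step is the same either way, which is why it is kept as a separate function here.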

DMD monitors more than 4,300 radio stations and TV channels 24/7. It is used for several different tasks, the most relevant being (1) calculating the percentage of music played on each channel for tax-paying purposes, (2) detecting music that hasn’t been identified by our audio fingerprinting technology, so that it can later be ingested into our database for future detection, and (3) classifying identified music as played in the foreground or in the background.
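All three tasks start from per-segment detector outputs combined with fingerprinting results. The sketch below is a hypothetical illustration of that aggregation step in Python: the `Segment` record, its fields and the convention that a missing `track_id` means “no fingerprint match” are assumptions made for this example, not BMAT’s actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Segment:
    """Hypothetical record: one classified stretch of a monitored channel."""
    channel: str
    start: float                    # seconds from the start of the monitoring period
    duration: float                 # seconds
    dmd_class: str                  # "foreground music", "background music" or "no music"
    track_id: Optional[str] = None  # fingerprint match, or None if unidentified


def music_percentage(segments: List[Segment]) -> Dict[str, float]:
    """Task (1): percentage of monitored time per channel that contains music."""
    total: Dict[str, float] = defaultdict(float)
    music: Dict[str, float] = defaultdict(float)
    for s in segments:
        total[s.channel] += s.duration
        if s.dmd_class != "no music":
            music[s.channel] += s.duration
    return {ch: 100.0 * music[ch] / total[ch] for ch in total if total[ch] > 0}


def unidentified_music(segments: List[Segment]) -> List[Segment]:
    """Task (2): music segments with no fingerprint match, candidates for ingestion."""
    return [s for s in segments if s.dmd_class != "no music" and s.track_id is None]


def usage_by_track(segments: List[Segment]) -> Dict[str, Dict[str, float]]:
    """Task (3): seconds each identified track was played in foreground vs background."""
    usage: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for s in segments:
        if s.track_id is not None and s.dmd_class != "no music":
            usage[s.track_id][s.dmd_class] += s.duration
    return {track: dict(classes) for track, classes in usage.items()}
```

For example, a radio channel with 60 s of identified foreground music, 30 s of speech and 30 s of unidentified background music would come out at 75% music, with the last 30 s flagged as a candidate for ingestion.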

DMD is the result of our first attempt at using deep learning for music detection, and it has already outperformed all of our previous algorithms. Every day, we work on incorporating the latest advances in the field to offer our clients the best possible music detection algorithm.

Written by Blai, Research Engineer
