Audio fingerprinting is the process of condensing an audio signal into a compact digital summary by extracting the acoustically relevant characteristics of a piece of audio content.

Our motto is “We hear everything everywhere and tell everyone who wants to know.” Something we don’t mention as often, but are equally proud of, is how we do it: audio fingerprinting. It’s quite literally our identity mark, the system that allows us to identify 52 years’ worth of audio against 72M sound recordings every day.

Tempo is a distinctive feature of music. In some genres, such as classical, it helps an expert distinguish between Glenn Gould’s 1955 and 1981 recordings of Bach’s Goldberg Variations. They sound alike, but slight differences (in timbre, texture and the flow from one variation to the next) make them two different sound recordings.

On the other hand, when a DJ plays electronic music in a club, tempo changes are applied to the original recordings through effects like scratching or time-scaling. They definitely affect the way we dance and feel, but most of the melodies remain recognisable: the tempo is different, but the sound recording stays the same.

If you had to design a unique representation of a song, you’d need to take into account elements like timbre, intensity, melody and tempo. If you designed it just for classical music, it would have to capture tempo strictly, as any subtle variation could signal a different performance. For electronic genres, however, it would need to pay less attention to tempo and more to timbre and melody, because the very same song played at different speeds is still the same sound recording. This is a challenging trade-off, and it’s connected to a more complex question: when are two sound recordings the same? Where exactly is the boundary at which one song becomes a different one?

It all depends on the extent to which these effects are applied. Sigur Rós’ Starálfur shows the impact that sound effects can have on a song.

Let’s first take a look at the original Starálfur.

Now, listen to it played four times slower. Can we still say it’s the same song? Even though it’s the same score, Sigur Rós considered it a different song and released it under a different name: Avalon.

Tempo is the example we chose, but many other sound transformations (distortion, compression, echo, noise masking…) can alter the perception of music. Applied at low intensity, these effects are barely audible. When they’re less subtle, though, there comes a point at which the original recording isn’t itself anymore, as with Starálfur and Avalon.

“How do you define the boundary where a song becomes an entirely different one?”

When it comes to identifying music on a large scale, we need to find out exactly which attributes make a song unique, that is, which ones turn it into a different song when altered. The tool we’ve chosen for this is audio fingerprinting technology.

Audio fingerprinting

An audio fingerprint is a condensed digital summary of an audio signal, generated by extracting the acoustically relevant characteristics of a piece of audio content. Combined with matching algorithms, this digital signature makes it possible to identify a specific recording and tell it apart from other versions that share the same title.
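Many fingerprinting schemes start “extracting acoustically relevant characteristics” from a spectrogram: the signal is cut into short overlapping frames and each frame’s energy is measured per frequency bin. The Python sketch below is a minimal illustration under our own assumptions (a naive DFT instead of an FFT, a Hann window, a 64-sample frame with a 32-sample hop, and the single loudest bin per frame as a stand-in feature). It is not BMAT’s extractor, and the function names are hypothetical.

```python
import cmath
import math


def spectrogram(signal: list[float], frame_size: int = 64, hop: int = 32) -> list[list[float]]:
    """Magnitude spectrogram: one row per frame, one column per frequency bin (0..Nyquist)."""
    # Hann window reduces spectral leakage between neighbouring bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size) for n in range(frame_size)]
    rows = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_size)]
        # Naive O(N^2) DFT to stay dependency-free; a real system would use an FFT.
        row = [
            abs(sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size) for n in range(frame_size)))
            for k in range(frame_size // 2 + 1)
        ]
        rows.append(row)
    return rows


def loudest_bins(spec: list[list[float]]) -> list[int]:
    """Crude per-frame feature: the index of the strongest frequency bin."""
    return [max(range(len(row)), key=row.__getitem__) for row in spec]
```

For a 1 kHz tone sampled at 8 kHz, each bin spans 8000 / 64 = 125 Hz, so the loudest bin in every frame is bin 8. Real systems keep richer features per frame (several spectral peaks, or differences in band energy) rather than a single bin.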

When a song is ingested into our database, we automatically generate and store its fingerprint, which we later use to identify the audio we capture from TV, radio and venues. Just like with human fingerprints, we can compare the fingerprint of a recording against a global database of songs and locate its match within seconds.
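The ingest-then-identify flow can be sketched as an inverted index from fingerprint hashes to the songs and positions where they occur. The Python sketch below borrows the widely published landmark-hashing idea (look up each query hash, then vote for the reference whose hashes line up at a consistent time offset). It is not BMAT’s matching algorithm: the integer hashes are hypothetical stand-ins for whatever features a real fingerprint encodes, and `min_votes` is an illustrative threshold.

```python
from collections import Counter, defaultdict
from typing import Optional


class FingerprintIndex:
    """Toy inverted index mapping hash -> [(song_id, time_frame), ...]."""

    def __init__(self) -> None:
        self._postings: dict[int, list[tuple[str, int]]] = defaultdict(list)

    def ingest(self, song_id: str, fingerprint: list[tuple[int, int]]) -> None:
        # Store every (hash, frame) pair of a reference song under its hash.
        for h, t in fingerprint:
            self._postings[h].append((song_id, t))

    def identify(self, query: list[tuple[int, int]], min_votes: int = 3) -> Optional[str]:
        # A genuine match shares many hashes with one reference, all shifted
        # by the same time offset, so we count votes per (song, offset).
        votes: Counter = Counter()
        for h, t_query in query:
            for song_id, t_ref in self._postings.get(h, []):
                votes[(song_id, t_ref - t_query)] += 1
        if not votes:
            return None
        (song_id, _offset), count = votes.most_common(1)[0]
        return song_id if count >= min_votes else None
```

Voting on the offset is what lets a few seconds captured in the middle of a broadcast match a reference fingerprint that starts much earlier.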

“A match requires the recorded audio to be almost identical to the registered song”

But since every recording is unique, identifying a piece of audio (matching it to its fingerprint and metadata) requires it to be almost identical to the stored, fingerprinted song. Not strictly identical, though: a good fingerprinting technology must be robust against certain sound degradations, and it must also detect when the song isn’t itself anymore.
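One common way to make “almost identical” concrete for binary fingerprints is a bit error rate: count the bits that differ between query and reference, and accept the match only if that fraction stays below a threshold. The sketch below assumes fingerprints are lists of 32-bit integers, as in some published bit-based schemes, and uses 0.35 purely as an illustrative threshold; this post does not describe BMAT’s actual representation or tolerance.

```python
def bit_error_rate(a: list[int], b: list[int], bits_per_word: int = 32) -> float:
    """Fraction of differing bits between two equal-length lists of fixed-width words."""
    if not a or len(a) != len(b):
        raise ValueError("fingerprints must be non-empty and of equal length")
    differing = sum(bin(x ^ y).count("1") for x, y in zip(a, b))
    return differing / (len(a) * bits_per_word)


def is_match(a: list[int], b: list[int], threshold: float = 0.35) -> bool:
    # Below the threshold: small degradations flipped a few bits, so it is a match.
    # At or above it: too much has changed to call it the same recording.
    return bit_error_rate(a, b) < threshold
```

The threshold encodes the trade-off discussed above: raise it and more degraded captures are matched, but so are recordings a listener would call different.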

We believe human perception determines this boundary, and we train our technology to develop the same sensitivity: when we humans cannot recognise a song, our matching algorithm doesn’t identify it either.

We are family

Music identification has its own particularities in each scenario. We optimise our fingerprinting for each use case, so that it’s as accurate as possible at the lowest computational cost. This is how we built an entire fingerprinting family.

Starting with the basics, identifying audio in an ideal scenario, where it sounds just like its original recording, is the simplest requirement for a fingerprint. That’s what FFP (fast fingerprint) does. You can call it the stupid kid everyone loves or the indispensable grandparent.

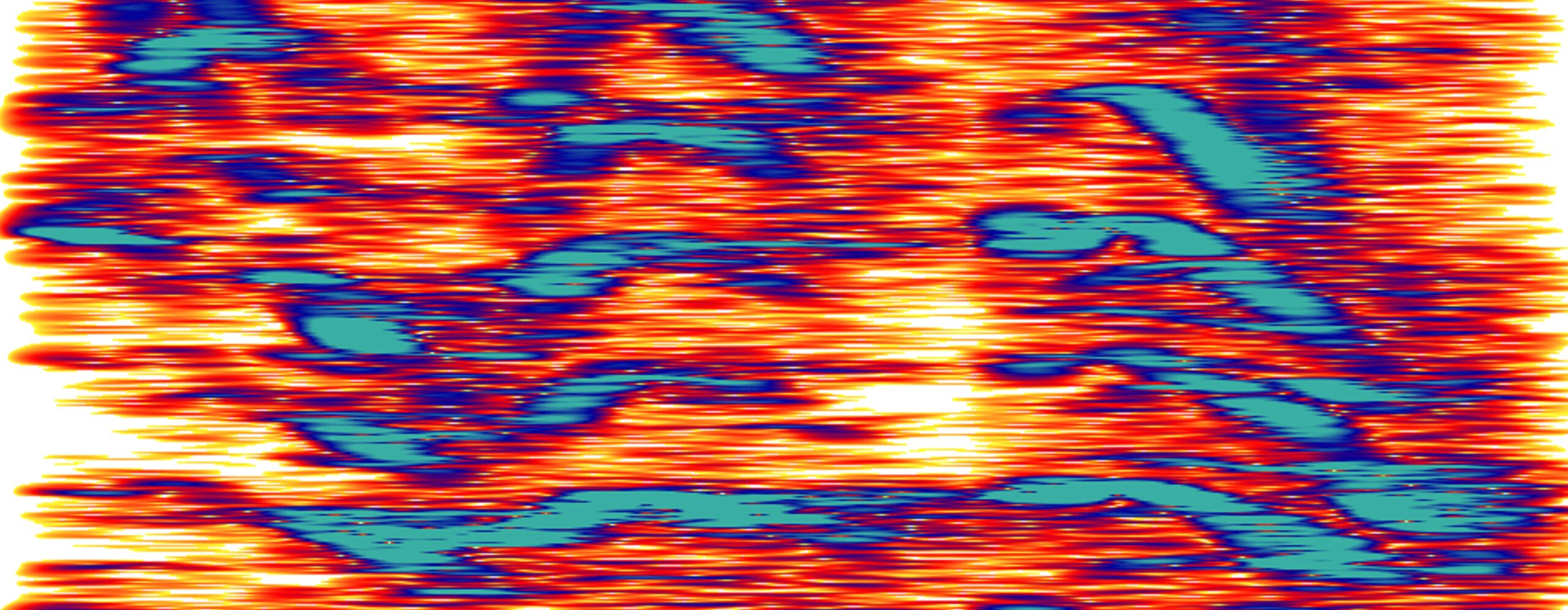

Fragment of the spectrogram of all BMATers saying Music Innovators. A spectrogram is the basis for our audio fingerprinting.

However, music isn’t normally played under ideal circumstances, not even on radio stations, where effects like dynamic range compression standardise the sound. Our standard fingerprint is our breadwinner. Very light and agile, it’s more tolerant and returns a match even when there are slight modifications of time or pitch, voice-overs…

After binge-watching TV, we found that one of the most common sound artefacts, and the most disturbing for a music identification system, is foreground noise. So we developed a fingerprint with a great skill for paying attention to background music, no matter how low its volume. It won’t surprise you that it’s the heaviest member of the family and the most computationally expensive.

“Sound artefacts are very frequent, either because of sound effects or the circumstances under which the song is being played”

With very different life choices, BMAT’s party animal has the most refined hearing. HPCPFP, the harmonic pitch class profile fingerprint, returns a match when the two songs being compared have highly similar harmonic and predominant pitch structures. We use it to detect covers, live versions, edits…
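A pitch class profile folds spectral energy into the 12 semitone classes of the octave, which makes it largely insensitive to instrumentation and timbre; comparing two profiles under all 12 circular shifts additionally tolerates a change of key, as covers often have. The sketch below is a deliberately simplified chroma, not HPCP proper (which also weights harmonics and uses finer pitch resolution) and not BMAT’s implementation. The frequency range and the transposition-invariant cosine similarity are our own assumptions.

```python
import math


def pitch_class_profile(magnitudes: list[float], sample_rate: float, fft_size: int,
                        fmin: float = 55.0, fmax: float = 5000.0) -> list[float]:
    """Fold spectral energy into 12 pitch classes (C=0 ... B=11), normalised to a max of 1."""
    profile = [0.0] * 12
    for k, mag in enumerate(magnitudes):
        freq = k * sample_rate / fft_size
        if fmin <= freq <= fmax:
            midi = 69 + 12 * math.log2(freq / 440.0)  # A4 = 440 Hz = MIDI note 69
            profile[round(midi) % 12] += mag ** 2
    peak = max(profile)
    return [p / peak for p in profile] if peak > 0 else profile


def profile_similarity(p: list[float], q: list[float]) -> float:
    """Cosine similarity, maximised over the 12 circular shifts (key transpositions)."""
    def cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    return max(cosine(p, q[s:] + q[:s]) for s in range(12))
```

A C major triad and a D major triad score 1.0 here, because shifting one profile by two semitones lines it up exactly with the other. A real cover detector would compare sequences of such profiles over time rather than a single profile.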

These are the main areas that have kept us busy so far, but we keep researching and experimenting to find efficient new solutions to the challenges music presents. The family will continue to grow.

Written by Brais, Head of Comms
