We gave Deep Learning the chance to challenge our classifier and identify what is music and what is not.

You never know when you’ll close the loop. After a brief encounter with Neural Networks, Àlex decided to go find an algorithm somewhere else. Two decades later they met again and became pals. Now called Deep Learning, Neural Networks showed up last year at BMAT to help us solve one of our biggest challenges – how to distinguish what is music and what is not.

In 2001 I was part of a team that was working on a singing synthesizer that later became a pop star in Japan. Amongst other duties, I had been given the mission to come up with a robust automatic voiced/unvoiced gate that could help us speed up the segmentation of a cappella recordings into phonemes. I spent several weeks using Weka – a machine learning Java application – to train a Neural Network. This Neural Network was given hundreds of previous manual segmentations as examples and was trained under all sorts of different configurations. The results were amazing – I couldn’t manage to get it working. I didn’t even get close to the results obtained with the simplest possible algorithm – an if/then based on the spectral centroid.
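To give an idea of how simple that baseline is, here is a minimal sketch of an if/then gate on the spectral centroid. It is not the code we used back then: the function names, the 2000 Hz threshold and the assumption that voiced frames sit below it are all illustrative.

```python
import cmath
import math


def spectral_centroid(frame, sample_rate):
    """Return the magnitude-weighted mean frequency (in Hz) of one audio frame."""
    n = len(frame)
    weighted_sum = 0.0
    magnitude_sum = 0.0
    # Naive DFT over the non-negative frequency bins; slow but fine for short frames.
    for k in range(n // 2 + 1):
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        magnitude = abs(coeff)
        weighted_sum += (k * sample_rate / n) * magnitude
        magnitude_sum += magnitude
    return weighted_sum / magnitude_sum if magnitude_sum > 0 else 0.0


def is_voiced(frame, sample_rate, threshold_hz=2000.0):
    """The whole 'algorithm': one if/then on the centroid.

    threshold_hz is a hypothetical value; in practice it would be tuned on
    manually segmented recordings.
    """
    return spectral_centroid(frame, sample_rate) < threshold_hz


# Two synthetic frames: a low tone standing in for a vowel, a high one for a fricative.
SAMPLE_RATE = 8000
low_frame = [math.sin(2 * math.pi * 250 * t / SAMPLE_RATE) for t in range(256)]
high_frame = [math.sin(2 * math.pi * 3000 * t / SAMPLE_RATE) for t in range(256)]
print(is_voiced(low_frame, SAMPLE_RATE), is_voiced(high_frame, SAMPLE_RATE))
```

The idea is that sung vowels concentrate their energy in the lower part of the spectrum, while unvoiced consonants such as "s" push the centroid upwards, so a single threshold already separates many frames.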

My conclusion at that time was that Neural Networks was probably a good name for a band but unfit for signal processing. This article is about how it took almost 20 years to turn the tortilla around – as we say around here – and how I ended up surrendering to the power of Neural Networks, or, as they like to be called today, Deep Learning.

Marvin Minsky, co-founder of the MIT Artificial Intelligence Laboratory.

Neural Networks are based on the McCulloch-Pitts neuron model (published in 1943), where a number of inputs are weighted and added up to trigger an output signal – as shown in the figure below. Soon after its publication, different computation techniques based on this artificial model started to appear. They grouped sets of neurons in different architectures and refined the arithmetic involved. The scientific community at that time considered the results promising, and the Neural Networks discipline steadily gained popularity and momentum throughout the 1950s and 1960s.

A McCulloch-Pitts neuron.
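In code, the weighted-sum-and-trigger idea fits in a few lines. This is a minimal sketch with illustrative weights and thresholds, not a faithful reproduction of the 1943 formulation:

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if activation >= threshold else 0


# With unit weights, the threshold alone decides which logic function we get.
def logical_and(a, b):
    return neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    return neuron([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

Here the weights and thresholds are set by hand. Training a network means finding them automatically from examples.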

That went on until 1969, when Marvin Minsky and Seymour Papert (co-founder and co-director of the MIT Artificial Intelligence Laboratory, respectively) published Perceptrons. The book concluded that multi-layered neural networks – which were considered the most promising and natural evolution of the discipline at the time – would take far too many iterations to learn and far too much time to compute. After the book, scepticism took over, funds went missing, and in time another supervised learning method, the Support Vector Machine, became the new celebrity. Neural Networks went silent, left the building and grew a beard.

It was not until 2006 that Geoffrey Hinton, Simon Osindero and Yee-Whye Teh published an article showing how multi-layered neural networks could be trained effectively. The paper referred to learning for deep belief nets, i.e. Deep Learning. Neural Networks was back, perfectly shaved, and ready to burn the house down. Just like Nirvana’s second album Nevermind, Neural Networks’ second coming, Deep Learning, turned out to be a classic.

Lee Sedol moving. European champion Fan Hui said this about AlphaGo’s 37th move: “It’s not a human move. I’ve never seen a human play this move. So beautiful.”

From then on Deep Learning proved unstoppable and improved at an accelerating pace. In 2011 a Deep Learning based software achieved superhuman performance in a visual pattern recognition contest for the first time. In March 2016 AlphaGo – Google’s Deep Learning Go player – defeated world champion Lee Sedol. And in December 2017 AlphaZero – a Deep Learning chess player – beat the most powerful chess program until then after teaching itself in four hours, playing against itself using reinforcement learning. What?!

That’s right, AlphaZero didn’t learn from previous human chess masters’ moves. It learned alone, and ignoring humans turned out to be the software’s smartest move. Not only did this save enormous amounts of training time, it also made AlphaZero perform better than any other program. As Max Tegmark – Professor at MIT and Scientific Director of the FQxI – put it: “the shocking news here isn’t the ease with which AlphaZero crushed human players, but the ease with which it crushed human AI researchers, who’d spent decades hand-crafting ever better chess software”.

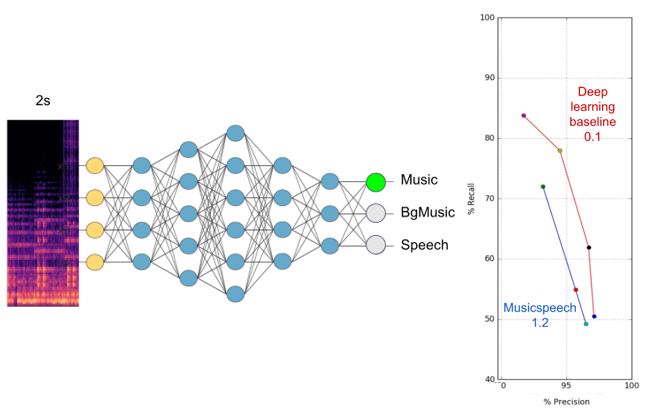

Left: representation of our Deep Learning, from spectrogram to a three state classification. Right: recall vs. precision for our Deep Learning classifier, compared against previous version.
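For readers new to the two axes of that plot, here is a minimal sketch of how precision and recall are computed for one class. The labels and the tiny example data are made up for illustration. They are not our ground truth, and the names of our three states are not listed here.

```python
def precision_recall(true_labels, predicted_labels, positive="music"):
    """Return (precision, recall) for the class named by `positive`."""
    pairs = list(zip(true_labels, predicted_labels))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    # Precision: of everything flagged as music, how much really was music.
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    # Recall: of all the real music, how much the classifier found.
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Hypothetical labels for six audio segments.
truth = ["music", "music", "music", "speech", "speech", "music"]
guess = ["music", "music", "speech", "music", "speech", "music"]
print(precision_recall(truth, guess))
```

A classifier can trade one for the other by being more or less eager to say "music", which is why the two are usually plotted against each other.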

At BMAT we have experienced something similar. After three years of trying different ground truth corpora, different training strategies and different algorithms, we had what we believed was the best music / non-music classifier we could come up with. Last year we decided to review the use of Artificial Intelligence in the company. We wanted to rethink our practices with regard to where and how to use it. As part of this process, we gave Deep Learning the chance to challenge our classifier. The first Deep Learning results we obtained proved better than any of our previous algorithms. Now we are training a proper artificial CEO.

Written by Àlex, CEO
